This author has had many clients and associates who needed (or wanted) video to help promote their products or services. Most recently, the need was to demonstrate, or "tease," the creation and editing of a humorous short video.

First, some nerdy and creative details on making a "feasibility" and "tease" video. Second, some key management lessons learned from making a "simple" video ("Hey, what's the big deal? My 5-year-old did that on his iPad!" Yes, he did. Being 5 years old is a key factor: at that early age, the mind is free of too many life assumptions).

Project challenge: quick and simple generation of video and audio special effects for a humorous alien takeover of the video signal, one less ominous and spooky than the 1963 Outer Limits intro roll: "We are controlling transmission." (see 60 sec intro video)

More specific video creation challenge: how can a video creator-editor create and overlay TV snow (noise) on a short, fun promo video clip with "only" a Linux or Win32/64 laptop, without resorting to some complex Rube Goldberg mix of an A/D converter and digital recording from a noisy analog source? In other words, how do we avoid cabling together a herd of odd and old video boxes just to obtain "junk" video for VFX-SFX (special effects)?

Quick answer: use "simple" command line tools like FFMPEG and FFPLAY. Reverse 40+ years of digital video evolution and synthetically generate old TV snow to "fuzz up" a perfectly good video clip, for fun and profit.

Quick look at the result: here is an 8 sec test and project-budgeting feasibility clip that proved out the FFMPEG VFX-SFX approach illustrated below.

8 sec Video: Alien noise signal takeover.

Goals and director details, starting with "the script":

- Cold open into TV "snow" (video and audio noise), just like the "old days" when an analog TV powered up on a "blank" channel.

- Cross fade from TV snow to the Intro slide (as if changing the channel, or a TV station coming "in range").

- Cross fade via TV snow from the Intro slide to the cartoon alien (the "episode" segment: the alien "takeover").

- Cross fade to the trailing Outro slide, then fade to black.

(1) Seed the TV snow transition/cross fade video clip: generate a very small video and audio clip from random number sources at QQVGA resolution (160x120 pixels, 4:3 aspect):

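```bash
# -- Generate 5 secs of QQVGA-res video. Signal? A random number source
# -- Note that -filter_complex comes in two parts:
#    (a) Video gen: "geq=random(1)*255:128:128"
#        luma = random 0..255; Cb and Cr fixed at 128 (neutral), so the snow is grayscale
#    (b) Audio gen: "aevalsrc=-1.5+random(0)"
#        random(0) returns 0..1, so samples span -1.5..-0.5 (noise with a large DC offset);
#        "-1+2*random(0)" would center the noise on zero
#
ffmpeg -f lavfi \
  -i nullsrc=s=160x120 \
  -filter_complex "geq=random(1)*255:128:128;aevalsrc=-1.5+random(0)" \
  -t 5 out43.mkv
```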
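For the curious, the per-pixel logic of that geq expression is tiny. A minimal pure-Bash sketch, not part of the original workflow, that writes one 160x120 grayscale "snow" frame as an ASCII PGM image, drawing each luma value from Bash's $RANDOM instead of FFMPEG's random(). The function name write_snow_pgm and the file name snow_frame.pgm are hypothetical; there is no chroma at all here, which matches the neutral 128:128 chroma in the geq call.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write one W x H grayscale "snow" frame as an ASCII PGM (P2) file.
# Each pixel's luma is an independent random value in 0..255, mirroring what
# geq=random(1)*255:128:128 does for every pixel of every frame.
write_snow_pgm() {
  local width=$1 height=$2 out=$3
  local x y row
  {
    printf 'P2\n%d %d\n255\n' "$width" "$height"   # PGM header: magic, size, max value
    for (( y = 0; y < height; y++ )); do
      row=""
      for (( x = 0; x < width; x++ )); do
        row+="$(( RANDOM % 256 )) "                # $RANDOM is 0..32767; keep 0..255
      done
      printf '%s\n' "${row% }"                      # one image row per line, trailing space trimmed
    done
  } > "$out"
}

write_snow_pgm 160 120 snow_frame.pgm
```

ImageMagick can view or convert the result (e.g. `convert snow_frame.pgm snow_frame.png`). For whole clips FFMPEG is far faster, since this Bash loop visits 19,200 pixels for just one frame.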

Playing the created video "out43.mkv" gives this screenshot preview:

Synthetic random TV snow (video noise and audio)

(2) Up-convert and expand the 160x120 video to the "nearest" and "next" 16:9 video aspect: 854x480 pixels (aka FWVGA). This step expands and "smears out" the small pixels into a more realistic, analog-looking set of "blurry" noise pixels. Audio is just copied across. Command line details:

```bash
# --- Now resize to FWVGA (smallest 16:9 aspect "std") ---
# --- Note use of "-aspect" to "force" MPlayer & others to use the
# --- DAR (Display Aspect Ratio) and not rescale to 4:3 or some other
# --- "-c:a copy" passes the audio stream through without re-encoding
#
ffmpeg -i out43.mkv -vf scale=854:480 -aspect 854:480 -c:a copy out169.mkv
#
# Note and caution: by default FFMPEG version 3.3.3-4 generates
# pix_fmt "yuv444p", which often will NOT play on iPhones,
# some versions of iMovie, QuickTime and Kodi media player
# (Kodi just blinks & ignores). Correction? Two options:
# (1) add the command line switch "-pix_fmt yuv420p"
# (2) or make certain the video editing & video rendering
#     engine outputs yuv420p pixel format (OpenShot!).
```
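Why 854 and not 853? 480 x 16/9 = 853.33, and 4:2:0 (yuv420p) frames need an even width for H.264, so the nearest usable width is 854. A minimal Bash sketch of that arithmetic, plus a wrapper around the step (2) command with the yuv420p fix applied. The function names and the FFMPEG override variable (set it to `echo` for a dry run) are illustrative assumptions; `-pix_fmt` and `-c:a copy` are standard FFMPEG options.

```bash
#!/usr/bin/env bash
# Hypothetical helper: nearest EVEN width giving a 16:9 frame for a given height.
# width = 2 * round(height * 16 / 9 / 2), in integer math with rounding.
even_169_width() {
  local height=$1
  echo $(( (height * 16 + 9) / 18 * 2 ))
}

# Hypothetical wrapper around step (2): upscale, force yuv420p, copy audio as-is.
# FFMPEG may be overridden (e.g. FFMPEG=echo prints the command instead of running it).
upscale_snow() {
  local in=$1 out=$2 height=${3:-480}
  local width
  width=$(even_169_width "$height")
  "${FFMPEG:-ffmpeg}" -i "$in" \
    -vf "scale=${width}:${height}" -aspect "${width}:${height}" \
    -pix_fmt yuv420p -c:a copy "$out"
}
```

Usage: `upscale_snow out43.mkv out169.mkv` reproduces step (2) at 854x480; `even_169_width 720` gives 1280 for a 720p variant.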

Output and screenshot result:

Noise pixels expanded to 854x480 for video overlay and fade to "Alien Takeover"

(3) Generate the Intro and Outro slides using ImageMagick and a BASH script. This is VERY FAST and saves the video rendering engine ("melt" in OpenShot) much computational work: each slide is one static image, simply repeated frame after frame to fill 4-10 secs of video.

```bash
#!/bin/bash
# ==========================================================
#
# Revised: 14-Jan-2017 -- Generate Slide from Text String
#
# See:
# http://www.imagemagick.org/Usage/text/
# http://www.tldp.org/LDP/abs/html/loops1.html
# http://superuser.com/questions/833232/create-video-with-5-images-with-fadein-out-effect-in-ffmpeg
#
# Hint for embedded newlines (double backslash):
# ./mk_slide_command_arg.sh How Long\\nText Be?
#
# ==========================================================
if [ $# -gt 0 ]; then
  # All arguments are joined with spaces ("$*") into one label string
  /usr/bin/convert -background black \
    -fill yellow \
    -size 854x480 \
    -font /usr/share/fonts/truetype/dejavu/DejaVuSans-BoldOblique.ttf \
    -pointsize 46 \
    -gravity center \
    label:"$*" \
    slide_w_text.png
else
  echo "Need at least one predicate object token"
  echo "Try: $0 A_Slide_String_w_No_Quotes"
  # ./mk_slide_command_arg.sh How Long\\nText Be?
  exit 1
fi
```
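The script above produces only a still PNG; the editor then holds that single image for 4-10 secs. A minimal sketch of doing the hold directly in FFMPEG instead, assuming a 25 fps target to match the default rate of the nullsrc source used in step (1). The function names and the FFMPEG override are hypothetical; `-loop 1` repeats the single input image, `-t` caps the output duration, and libx264's `stillimage` tune suits static content.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn one still slide into an N-second H.264 clip.
# -loop 1 repeats the single input image; -t stops the output after N seconds.
# FFMPEG may be overridden (e.g. FFMPEG=echo for a dry run).
slide_to_clip() {
  local png=$1 secs=$2 out=$3 fps=${4:-25}
  "${FFMPEG:-ffmpeg}" -loop 1 -framerate "$fps" -i "$png" \
    -t "$secs" -c:v libx264 -tune stillimage -pix_fmt yuv420p "$out"
}

# Identical frames the encoder must emit for a held slide: seconds x fps
slide_frames() {
  echo $(( $1 * ${2:-25} ))
}
```

Usage: `slide_to_clip slide_w_text.png 5 intro.mp4` yields a 5 sec intro clip, i.e. 125 identical frames at 25 fps.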

Static Slide / Pix for Intro

Static Slide / Pix for Outro

(4) Generate the "injected" alien signal slide (the "episode" segment) as a simple cartoon drawn with a digital paint program on an 854x480 pixel canvas (16:9 aspect ratio):

"Injected" alien signal slide (cartoon) from paint program

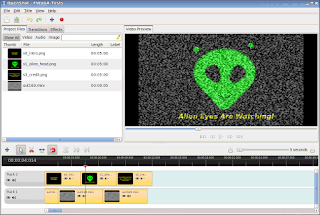

(5) Video-edit and merge the intro, episode and outro slides as video segments, using the synthetic TV snow for the cross fade transitions. In this example, OpenShot 1.4.x was used. A screenshot from the edit session follows; note the TV snow video sits in the lower track and "fills" the frame when the upper tracks "fade" to black. This gives the viewer the impression that some nefarious "alien" signal is being injected ("Controlling your TV!"):

Screenshot: OpenShot 1.4.3 video editor with the timeline segments loaded and the cursor at 4.0 secs

(6) Render (transcode) the video segments into a common video/audio format and container: in this case H.264 video (encoded with libx264) and AAC audio in an MP4 container.

(7) Results? Scroll back to the 8 sec YouTube clip above; we started with the end result. Yes, kinda "simple" and childish, but please recall the goals: (a) time and budget estimation for video creation and editing, (b) avoiding wiring together a bunch of old hardware and a video signal generator to make a small VFX-SFX effect, and most important, (c) a simple "laugh test" to check whether the promo-ad approach was a useful "viewer outreach" path.
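OpenShot handled the cross fades and the final render in this project. For readers who prefer to stay on the command line, here is a rough sketch of the same two steps in FFMPEG alone. It assumes FFMPEG 4.3 or newer (the release that added the xfade filter), a first clip at least offset + fade seconds long (the 5 sec snow clip with a 1 sec fade starting at 4 secs fits), and a second clip such as a slide video. The function name, file names and FFMPEG override are hypothetical. The fps, settb, scale and format steps normalize both inputs, since xfade expects matching frame size, frame rate and time base.

```bash
#!/usr/bin/env bash
# Hypothetical helper: cross fade clip 1 into clip 2, then render H.264/AAC in MP4.
# Audio (if any) is taken from the first clip, e.g. the TV snow hiss.
# FFMPEG may be overridden (e.g. FFMPEG=echo for a dry run).
crossfade_render() {
  local first=$1 second=$2 out=$3 fade=${4:-1} offset=${5:-4}
  # Normalize both video inputs so xfade sees identical parameters
  local norm="fps=25,settb=AVTB,scale=854:480,setsar=1,format=yuv420p"
  "${FFMPEG:-ffmpeg}" -i "$first" -i "$second" \
    -filter_complex "[0:v]${norm}[a];[1:v]${norm}[b];[a][b]xfade=transition=fade:duration=${fade}:offset=${offset}[v]" \
    -map "[v]" -map "0:a?" \
    -c:v libx264 -pix_fmt yuv420p -c:a aac -movflags +faststart "$out"
}
```

Usage: `crossfade_render out169.mkv intro.mp4 snow_to_intro.mp4`. Chaining more segments means repeating xfade with offsets accumulated along the timeline, bookkeeping that a GUI editor such as OpenShot keeps visual.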

Lesson Learned #1: Surprise! iPhone, iMovie, QuickTime, Kodi media player and others can bomb (fail) with certain color pixel formats. Apparently iPhones are strangely spooky. Say it ain't so, Steve Jobs. Corporate software policy or institutionalized bug? See the iOS and QuickTime details, and also the significant answer by DrFrogSplat from Jan 2015.
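A quick way to catch the yuv444p problem before a clip reaches an iPhone or Kodi is to ask ffprobe (which ships with FFMPEG) for the first video stream's pixel format. A minimal sketch; the function names and the FFPROBE override are hypothetical, and treating only yuv420p as "safe" is a simplifying assumption drawn from this project's experience, not an Apple specification.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the pixel format of the first video stream.
# FFPROBE may be overridden (e.g. with a stub for testing).
pix_fmt_of() {
  "${FFPROBE:-ffprobe}" -v error -select_streams v:0 \
    -show_entries stream=pix_fmt \
    -of default=noprint_wrappers=1:nokey=1 "$1"
}

# Succeeds only for yuv420p, the format that played everywhere in this project.
is_player_safe() {
  local fmt
  fmt=$(pix_fmt_of "$1") || return 2
  [ "$fmt" = "yuv420p" ]
}
```

Usage: `for f in *.mp4 *.mkv; do is_player_safe "$f" || echo "re-encode with -pix_fmt yuv420p: $f"; done`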

Intro slide from 1963 TV show "Outer Limits"

Lesson Learned #2, from the creative side (perspectives and deeper history): sometimes many years of experience and exposure can confound and confuse the creative process and fog "simple" workflows. Ghosts of a past technical life muddle and block the mind, and reaching back to a 5-year-old's mindset is often required. "How would a 5 year old make this 'tease' video?"

"Master Control" TV switcher room

Back in fall 1980, this author worked as a "weekend program switcher" in "Master Control" for PBS station KNEW TV-3. Lots of expensive equipment: $50K and $100K video processing "boxes" everywhere. The job was a tough Saturday and Sunday gig, 5 AM to 6 PM: unlock and power up the studio and switching equipment, carefully energize the 100 kilowatt transmitter, set up multiple VTRs for satellite "dubs" of time-shifted TV programs (i.e. video downloads), and feed promos (ads) and TV programs to the transmitter, all according to a rigid program schedule, then rinse, wash and repeat every 30-60 mins.

1980s broadcast quality video tape: a 1969 RC 2-inch quadruplex VTR

There was also much on- and off-air exposure to Sesame Street, The Electric Company, Masterpiece Theatre, etc. To add turbulence, broadcast students would drop in to edit project videos.

Legacy thinking? The fall 1980 weekend gig was great experience, but it filled my head with lots of legacy hardware, software and video production perspectives. 36+ years of technology have marched on, and all that old hardware and video production is long gone.

Or not? Color Mathematical Models to save bandwidth?

Lesson Learned #3: it turns out the video signal theory and processing practice of analog TV and video are not 100% "gone," but live on in the creation and editing of "modern" digital video, even for "simple" jobs, for "eye catcher" web publishing and promotion, or for distribution via YouTube, Vimeo, etc.

TV picture: numerically "perfect" colorbars for video signal "color burst" phasing and "hitting" the color calibration points in the Ostwald "color solid" (see below)


The spirit of analog color, and of human perception, "lives on" in digital video. While the technical details are gory and deep, suffice it to say that playing with FFMPEG was a pleasant surprise: I had forgotten about details like the YUV color space and 4:4:4 vs 4:2:0 pixel (chroma) encoding. And it was a shocking surprise that iPhones, iMovie, Apple QuickTime, Kodi media player and others can, and do, "blow up" with the yuv444p pixel format that FFMPEG produced by default.
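Why 4:2:0 saves bandwidth: at 8 bits per sample, yuv444p stores a full-resolution Y, U and V sample for every pixel, while yuv420p keeps full-resolution luma (Y) but only one U and one V sample per 2x2 block of pixels. A small Bash sketch of the raw (uncompressed) frame sizes for the 854x480 clips used here; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: raw bytes per 8-bit planar YUV frame.
#   yuv444p: Y, U, V all full size       -> 3 samples per pixel
#   yuv420p: U, V each (W/2) x (H/2)     -> 1.5 samples per pixel
raw_frame_bytes() {
  local w=$1 h=$2 fmt=$3
  local luma=$(( w * h ))
  case $fmt in
    yuv444p) echo $(( luma * 3 )) ;;
    yuv420p) echo $(( luma + 2 * ((w + 1) / 2) * ((h + 1) / 2) )) ;;
    *) echo "unsupported: $fmt" >&2; return 1 ;;
  esac
}

for fmt in yuv444p yuv420p; do
  echo "$fmt: $(raw_frame_bytes 854 480 "$fmt") bytes per frame"
done
```

For 854x480 this gives 1,229,760 bytes per frame for yuv444p versus 614,880 for yuv420p: half the raw data before any compression, with little visible loss because the eye is less sensitive to fine color detail than to fine brightness detail.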

From Wikipedia: the HCL color model (double cone), a foundation of the YUV color model of old analog video, still "buried" in the pixel formats of digital video.

This "old" but very sophisticated YUV "blending" and "tinting" of color over a black and white "backbone signal," a legacy of the 1950s TV transition from black and white to color, is not "dead" but alive as an almost corporeal "ghost" in "modern" digital video editing and processing. Why alive? Human perception of color and movement in clouds of analog or digital pixels has not changed. The hardware has changed much, but how humans "see" has not, and that perception still needs to be exploited to reduce bandwidth and video storage costs. This demand has not changed in 30+ years.
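The "black and white backbone" is the luma signal: a weighted sum of red, green and blue that a monochrome set can display on its own, with color carried separately as differences from it. A minimal Bash sketch using the ITU-R BT.601 weights (0.299, 0.587, 0.114) in integer thousandths; it ignores the studio-range offsets (16-235) that real encoders apply, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: full-range BT.601 luma, Y' = 0.299 R' + 0.587 G' + 0.114 B'
# computed in integer thousandths with rounding (inputs 0..255).
luma601() {
  local r=$1 g=$2 b=$3
  echo $(( (299 * r + 587 * g + 114 * b + 500) / 1000 ))
}

for rgb in "255 255 255" "255 0 0" "0 255 0" "0 0 255"; do
  read -r r g b <<< "$rgb"
  echo "RGB($r,$g,$b) -> Y=$(luma601 "$r" "$g" "$b")"
done
# prints Y=255, 76, 150 and 29: green dominates perceived brightness
```

The weights encode the human-perception point above: pure green looks about five times brighter than pure blue, so luma spends most of its precision there.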

How does the YUV magic happen? Pictures are worth thousands of words. Some from the old 1980s, and some from 1916 color theory, hint at the hardware and software techniques used: techniques that help video renderings "reach into the mind" of the viewer and "exploit" color and movement perception while also "compressing" the signals and data storage costs.

Vectorscope plot of a "perfect" colorbars test signal, with the scope beam "hitting" the calibration marks for Red, YL, Green, CY, Blue, and the unseen black-to-white axis running up and down through the circle center.
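Where do the vectorscope's calibration marks come from? Each bar's color-difference pair (U, V) is a point inside the scope's circle, and its angle is fixed by the bar's hue. A sketch using awk (called from Bash for floating point and atan2) with BT.601 luma and the classic analog scaling U = 0.492 (B - Y), V = 0.877 (R - Y). Angles are measured counterclockwise from the +U (B-Y) axis, which is an assumption about scope orientation; graticule layouts vary between instruments. The function name is hypothetical. Saturated and 75% bars land at the same angles; only the distance from the center changes.

```bash
#!/usr/bin/env bash
# Hypothetical helper: vectorscope angle (degrees, counterclockwise from the +U / B-Y axis)
# for an R G B triple in 0..1, using BT.601 luma and analog U/V scaling.
vector_angle() {
  awk -v r="$1" -v g="$2" -v b="$3" 'BEGIN {
    pi = atan2(0, -1)
    y = 0.299 * r + 0.587 * g + 0.114 * b    # luma: the black & white backbone
    u = 0.492 * (b - y)                       # scaled B-Y
    v = 0.877 * (r - y)                       # scaled R-Y
    deg = atan2(v, u) * 180 / pi
    if (deg < 0) deg += 360
    printf "%.0f\n", deg
  }'
}

# 75% color bars, in the usual left-to-right order (minus white)
for bar in "YL 0.75 0.75 0" "CY 0 0.75 0.75" "G 0 0.75 0" \
           "MG 0.75 0 0.75" "R 0.75 0 0" "B 0 0 0.75"; do
  read -r name r g b <<< "$bar"
  echo "$name: $(vector_angle "$r" "$g" "$b") deg"
done
# expected: YL 167, CY 283, G 241, MG 61, R 103, B 347
```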

From Wikipedia, the HSL and HSV "color solid": the vectorscope "lives" in the equatorial belt of Ostwald's 1916 color concept, and the vectorscope "hits" specific theoretical colors in this solid to "stabilize" video colors.


SUMMARY: When you are in college, or attending a formal technical school, a lot of theory is emphasized, and as a student you wonder, "when are we gonna get to some practical 'hands on' manipulations?" The value of abstract theory is often not appreciated until many years later, when the theory allows "seasoned students" to "see the unseen" as "modern" software stumbles ("Huh? The iPhone cannot render yuv444p format pixels? What does that mean?"), even when masked by 35+ years of technological change. Viva la abstractions!