Author Greg Wilson tossed out this thought -- a useful gold nugget -- and I am paraphrasing from memory:

"[Clients, Customers and] Users care about their data -- and really do not care about processing systems ... Data can live forever ... systems do not ... Just ask Russians doing genealogy on now defunct Soviet era computers."

This is a very important idea to remember when mining & extracting "value" from data. Here is an "old" example -- old in terms of the Internet's young age.

Background: Nov 1994 web data? Is it possible to bring digital data back to life -- from 1994? And to use it for a compare & contrast with current events? May 2018 news -- and reminders from past work associates -- about the most recent lava fissure eruption along the east rift zone of Kilauea volcano in Hawaii sparked some brain triggers.

In the deep corners of my mind -- I recalled some quick look, site analog GIS-mapping -- a fast compare and contrast -- of the Kilauea site -- to some remote risk sites on the Kamchatka Peninsula of Russia. The 1994 question was roughly this: Can we use JPL processed Synthetic Aperture Radar (SAR) data -- specifically Space Shuttle interferometric SAR (InSAR) data -- to remotely explore in Kamchatka?


Lava flow Pix from Wikipedia

InSAR basic flight-orbit line & radar data collection ground swath (from InSAR wiki page)

Goal? Extract terrain displacements & subsurface lava movement around the Tolbachik volcano complex?

Google Terrain map detail: Fissure eruption analog site south of Tolbachik volcano.

Motivation? The Tolbachik volcanic site complex showed potential -- at providing risk estimates -- comparable to volcanic hazards at the proposed nuclear waste repository at Yucca Mountain, Nevada, USA. And survey field work for places like Tolbachik was difficult & expensive. Maybe there was "more cost effective" satellite data to the rescue? At least for the preliminary studies?

Now the tough part for today: Did any GIS-mapping data survive from the 1994 archives?

Surprise! Yes. In a 1995 TAR archive file -- found on a 1999 ISO 9660 CDROM. And the CDROM remained 98% readable -- with just a few errors. The key Kilauea quick look "test" files were in a strangely named folder "Xmosaic". Huh? Let us recall -- in 1994 -- the world wide web was very young -- and one of the few viable web browsers was NCSA Mosaic -- and Mosaic would "drop" downloaded files into a folder called "XMosaic".

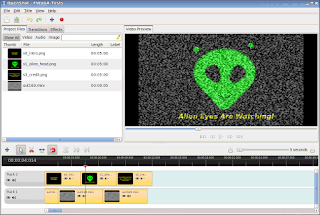

1995 Logo for NCSA Mosaic Web Browser

And to make data recovery more tricky -- my Mosaic version was running and storing data on a long-since-dead desktop 50 MHz Sun SPARCstation -- running a variation of *NIX called SunOS.

Fortunately the ISO 9660 standard has "survived" much better than the tricky Kodak Photo CD format.
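The recovery step described above -- hunting for the Mosaic download folder inside an old TAR archive -- can be sketched in a few lines of Python. This is only a minimal sketch using the standard `tarfile` module, not the procedure actually used in 2018. The function names and the `folder_hint` parameter are made up for illustration, and the archive path is whatever the reader has copied off the CDROM.

```python
import tarfile
from datetime import datetime, timezone


def find_members(archive, folder_hint="xmosaic"):
    """List regular files whose path contains folder_hint (case-insensitive).

    Returns (name, size in bytes, modification time as a UTC datetime).
    The default folder_hint is an assumption based on the folder name
    mentioned in the post.
    """
    hits = []
    for member in archive.getmembers():
        if member.isfile() and folder_hint.lower() in member.name.lower():
            # TAR headers store the modification time as a UNIX timestamp.
            stamp = datetime.fromtimestamp(member.mtime, tz=timezone.utc)
            hits.append((member.name, member.size, stamp))
    return hits


def report(tar_path, folder_hint="xmosaic"):
    """Print one line per matching file in the archive at tar_path."""
    with tarfile.open(tar_path) as archive:
        for name, size, stamp in find_members(archive, folder_hint):
            print(f"{stamp:%Y-%m-%d %H:%M} UTC  {size:>9} bytes  {name}")
```

Listing before extracting is a deliberate choice here: on a disc with read errors, it shows which files are worth the effort before any large reads are attempted.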

Reuse in 2018 -- find & extract key files -- and "upscale" the data value for a compare & contrast with today's events:

Fast result? Raw pix first -- with Oct 1994 UNIX timestamp:

Found Oct 1994 file in GIF format. See original HTML caption below (slightly updated version at JPL).
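One quick sanity check on a recovered image file is to read its header before trusting the file extension. The sketch below, with a hypothetical helper name, reads the first 10 bytes of a GIF: the 6-byte signature (`GIF87a` or `GIF89a`) followed by the width and height as little-endian 16-bit integers.

```python
import struct


def read_gif_header(data):
    """Return (version, width, height) from the first 10 bytes of a GIF file."""
    if len(data) < 10:
        raise ValueError("too short to be a GIF file")
    # 6-byte signature, then width and height as little-endian uint16.
    signature, width, height = struct.unpack("<6sHH", data[:10])
    if signature not in (b"GIF87a", b"GIF89a"):
        raise ValueError("not a GIF file")
    return signature[3:].decode("ascii"), width, height
```

A file that passes this check can still be damaged further in, so it is a first filter rather than a full validation.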

2018 Quick ReMapping and GeoRegistered KML and ground overlay image drape: Data value "upscale" of this 1994 image -- into "modern" GIS-mapping tools like Google Earth -- screen shot preview of image drape / image overlay setting:

Google Earth screenshot of Oct 1994 InSAR displacement via KML ground overlay
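For readers who want to build a similar image drape themselves, here is a rough sketch of how a KML `GroundOverlay` can be written and zipped into a KMZ with the Python standard library. The function names are hypothetical. The bounding box and rotation in the usage note are placeholders, loosely centered on the 19.5 N, 155.25 W point given in the JPL caption. They are not the georegistration used for the overlay in this post.

```python
import zipfile
from xml.sax.saxutils import escape

KML_TEMPLATE = """<?xml version="1.0" encoding="UTF-8"?>
<kml xmlns="http://www.opengis.net/kml/2.2">
  <GroundOverlay>
    <name>{name}</name>
    <Icon><href>{href}</href></Icon>
    <LatLonBox>
      <north>{north}</north>
      <south>{south}</south>
      <east>{east}</east>
      <west>{west}</west>
      <rotation>{rotation}</rotation>
    </LatLonBox>
  </GroundOverlay>
</kml>
"""


def build_ground_overlay_kml(name, href, north, south, east, west, rotation=0.0):
    """Return KML text that drapes the image at href over a lat/lon box.

    Bounds are in decimal degrees; rotation is in degrees,
    counterclockwise, about the center of the box.
    """
    return KML_TEMPLATE.format(
        name=escape(name), href=escape(href),
        north=north, south=south, east=east, west=west, rotation=rotation,
    )


def write_kmz(target, kml_text, image_name, image_bytes):
    """Write a KMZ (a ZIP holding doc.kml plus the image) to a path or file object."""
    with zipfile.ZipFile(target, "w", zipfile.ZIP_DEFLATED) as kmz:
        kmz.writestr("doc.kml", kml_text)
        kmz.writestr(image_name, image_bytes)
```

A call such as `build_ground_overlay_kml("Kilauea InSAR 1994", "kilauea.gif", 19.7, 19.3, -154.9, -155.6, rotation=30.0)` produces a starting point. The box and rotation then need hand tuning in Google Earth until coastlines and other landmarks line up, since the source image has north toward the upper left.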

Ready to Go KML-KMZ file? For those using Google Earth in your GIS-mapping work, the image overlay KMZ can be fetched from this link.

UPSHOT? Lesson? Open standard file formats are our friends. Unlike the Kodak Photo CD example, these files were stored in bit-byte formats -- and on media -- that had a recovery path -- far beyond the lifespan of the original hardware and software. Begin with the end in mind -- please think 20+ years ahead -- to aid data value extractions and future re-combinations.

Ref:

[1] Original HTML caption for Oct 1994 InSAR radar image from JPL website -- and the updated version:

354-5011

PHOTO CAPTION P-44753

October 10, 1994

Kilauea, Hawaii

Change Map

This is a deformation map of the south flank of Kilauea volcano on the big island of Hawaii, centered at 19.5 degrees north latitude and 155.25 degrees west longitude. The map was created by combining interferometric radar data -- that is data acquired on different passes of the space shuttle which are then overlayed to obtain elevation information -- acquired by the Spaceborne Imaging Radar-C/X-band Synthetic Aperture Radar during its first flight in April 1994 and its second flight in October 1994. The area shown is approximately 40 kilometers by 80 kilometers (25 miles by 50 miles). North is toward the upper left of the image. The colors indicate the displacement of the surface in the direction that the radar instrument was pointed (toward the right of the image) in the six months between images. The analysis of ground movement is preliminary, but appears consistent with the motions detected by the Global Positioning System ground receivers that have been used over the past five years. The south flank of the Kilauea volcano is among the most rapidly deforming terrains on Earth. Several regions show motions over the six-month time period. Most obvious is at the base of Hilina Pali, where 10 centimeters (4 inches) or more of crustal deformation can be seen in a concentrated area near the coastline. On a more localized scale, the currently active Pu’u O’o summit also shows about 10 centimeters (4 inches) of change near the vent area. Finally, there are indications of additional movement along the upper southwest rift zone, just below the Kilauea caldera in the image. Deformation of the south flank is believed to be the result of movements along faults deep beneath the surface of the volcano, as well as injections of magma, or molten rock, into the volcano’s "plumbing" system. Detection of ground motions from space has proven to be a unique capability of imaging radar technology. 
Scientists hope to use deformation data acquired by SIR-C/X-SAR and future imaging radar missions to help in better understanding the processes responsible for volcanic eruptions and earthquakes.

Spaceborne Imaging Radar-C and X-band Synthetic Aperture Radar (SIR-C/X-SAR) is part of NASA’s Mission to Planet Earth. The radars illuminate Earth with microwaves, allowing detailed observations at any time, regardless of weather or sunlight conditions. SIR-C/X-SAR uses three microwave wavelengths: L-band (24 cm), C-band (6 cm) and X-band (3 cm). The multi-frequency data will be used by the international scientific community to better understand the global environment and how it is changing. The SIR-C/X-SAR data, complemented by aircraft and ground studies, will give scientists clearer insights into those environmental changes which are caused by nature and those changes which are induced by human activity. SIR-C was developed by NASA’s Jet Propulsion Laboratory. X-SAR was developed by the Dornier and Alenia Spazio companies for the German space agency, Deutsche Agentur fuer Raumfahrtangelegenheiten (DARA), and the Italian space agency, Agenzia Spaziale Italiana (ASI), with the Deutsche Forschungsanstalt fuer Luft und Raumfahrt e.V. (DLR), the major partner in science, operations and data processing of X-SAR. #####